Mobile quality is the largest challenge mobile developers will encounter: they must contend with app complexity, device fragmentation, fast release cycles, short user sessions and higher user expectations for mobile applications. In this article, Sean Sparkman walks you through some of the basics of automated UI testing.

Gone are the days of single-screen mobile applications—applications are becoming more and more complex with each passing year. These complex features need to be tested. Existing features need to be retested even when making a minor change to another part of the application. This can take valuable time away from development and draw out release times.

In consumer mobile applications, users want to be able to open a mobile app and quickly complete a task. If users cannot start their task within three seconds of pressing the app icon, they will find another application. If an app developer finds a good idea, it will not be long before someone else copies it.

Everyone knows about version fragmentation on Android. Certain manufacturers do not let you upgrade to a newer version of Android. Oreo had only a 12.1% adoption rate as of July 23, 2018, according to Google. That is one year and four months after its first developer preview, and about eleven months after its public release.

| Version | Codename | API | Distribution |
|---|---|---|---|
| 2.3.3-2.3.7 | Gingerbread | 10 | 0.2% |
| 4.0.3-4.0.4 | Ice Cream Sandwich | 15 | 0.3% |
| 4.1.x | Jelly Bean | 16 | 1.2% |
| 4.2.x | Jelly Bean | 17 | 1.9% |
| 4.3 | Jelly Bean | 18 | 0.5% |
| 4.4 | KitKat | 19 | 9.1% |
| 5.0 | Lollipop | 21 | 4.2% |
| 5.1 | Lollipop | 22 | 16.2% |
| 6.0 | Marshmallow | 23 | 23.5% |
| 7.0 | Nougat | 24 | 21.2% |
| 7.1 | Nougat | 25 | 9.6% |
| 8.0 | Oreo | 26 | 10.1% |
| 8.1 | Oreo | 27 | 2.0% |

Device fragmentation further complicates this issue. There are six different screen densities on Android. In this ever-growing device market, you need to be able to test your application on more than a personal device.

Web applications have more leeway with users, who expect more from their mobile applications. Slow load times and poor performance in web applications are often chalked up to slow network speeds. Since mobile applications are installed on the device, users expect them to perform faster. Even without a network connection, the app must still work, even if it only displays an error message. This is actually tested during Apple App Store Review. The no-connection case is commonly tested, but slow connections are not. The user interface must remain responsive while pulling data from an API, even over a sluggish connection.

Testing applications under slow and no connection conditions is a good first step. The next step is error path testing. Handling error conditions can make or break an application. Crashing is a major no-no. Unhandled errors in a web browser will typically not crash the browser window. Native applications crash on error, full stop. If the API is up but the database is down, it may cause unexpected errors. Many users will stop using or even uninstall an application if it crashes. If the user is frustrated enough, they will write a review with low or no stars. Low-star reviews are very difficult to come back from. The most common error I have seen is when a developer accesses a UI element from a background thread. On an iOS simulator, nothing will happen. The UI element will not update, and no errors will be thrown. However, when executing on a physical device, it will cause a crash. If the developer only tests on simulators, they will never discover their critical error.
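
The background-thread mistake described above is usually fixed by marshalling the UI update back onto the main thread. The following is only a minimal sketch, assuming a Xamarin.Forms app; the page, the label and the `LoadStatus` helper are hypothetical names used for illustration, and native iOS or Android projects would use their own platform equivalents.

```csharp
using System.Threading.Tasks;
using Xamarin.Forms;

// Hypothetical page used only to illustrate the threading fix.
public class StatusPage : ContentPage
{
    readonly Label statusLabel = new Label { AutomationId = "Status" };

    public StatusPage()
    {
        Content = statusLabel;
    }

    // Does the slow work off the UI thread, then updates the label safely.
    public Task RefreshAsync()
    {
        return Task.Run(() =>
        {
            // Runs on a thread-pool thread.
            var text = LoadStatus();

            // Setting statusLabel.Text directly here would be the bug
            // described above. Hand the update to the UI thread instead.
            Device.BeginInvokeOnMainThread(() => statusLabel.Text = text);
        });
    }

    // Stand-in for a network or database call (assumption).
    static string LoadStatus()
    {
        return "Ready";
    }
}
```

A UI test that waits for the `Status` element on a physical device is one way to catch a regression of this kind before users do.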

The Various Ways of Testing Applications

There are several different ways to test an application. Beta testing with users is great in that it tests with real users, but it is difficult to get good feedback. Manual testing is very slow, limits the number of devices you can use and is not a realistic test. Unit testing should always be done, but it is far from realistic and should be done in addition to integration testing.

So how do we test quickly, on a broad set of devices, and with every release? Automated UI testing is the answer. My preferred toolset for automating UI testing is Xamarin.UITest. Tests can be written quickly and easily in C#. They can tap, scroll, swipe, pinch, enter text and more inside a native application, regardless of whether it was built with Xamarin. These tests can then be run locally on simulators and devices. Once the application is ready to be tested against a broader set of physical devices, the same tests can be run inside Microsoft’s App Center against most popular devices on a number of different versions of iOS and Android.

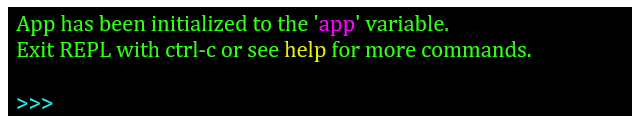

The best way to get started writing UI tests is with Xamarin.UITest’s built-in REPL. If you’re not familiar with the term, REPL is an acronym that stands for Read-Evaluate-Print Loop. A REPL is a great way to start learning a new language or framework, because it allows you to quickly write code and see the results. Xamarin.UITest’s REPL can be opened by calling app.Repl().

    [Test]
    public void ReplTest()
    {
        // Pauses the test and opens the interactive REPL console
        app.Repl();
    }
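
The snippet above assumes an `app` field that has already been started. A minimal sketch of the surrounding test fixture might look like the following; the class name and the two file paths are placeholders rather than anything from a real project, and an IDE-generated UITest project typically wraps this start-up code in a helper of its own.

```csharp
using NUnit.Framework;
using Xamarin.UITest;

// One fixture instance is created per platform listed here.
[TestFixture(Platform.Android)]
[TestFixture(Platform.iOS)]
public class ReplTests
{
    readonly Platform platform;
    IApp app;

    public ReplTests(Platform platform)
    {
        this.platform = platform;
    }

    [SetUp]
    public void BeforeEachTest()
    {
        // Placeholder paths (assumptions): point these at your own builds.
        if (platform == Platform.Android)
        {
            app = ConfigureApp.Android
                .ApkFile("path/to/your-app.apk")
                .StartApp();
        }
        else
        {
            app = ConfigureApp.iOS
                .AppBundle("path/to/YourApp.app")
                .StartApp();
        }
    }

    [Test]
    public void ReplTest()
    {
        // Opens the interactive console against the freshly started app.
        app.Repl();
    }
}
```

Starting the app in `SetUp` gives every test a fresh launch, which keeps tests from depending on state left behind by an earlier one.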

Running a test that contains the Repl method call will open a console. The console is equipped with auto-complete. Developers can work through the steps inside the console to test their application. Once a test is done, the copy command will take all the steps used in the REPL and place them on the clipboard. Finally, the developer can paste the copied commands into a new test method in their UITest project.

The application’s UI elements can be examined by calling the tree command inside the console. This will display a list of elements with their children. Elements can be interacted with from the console using commands like Tap and EnterText. When writing a test, WaitForElement should be called so that the test waits for the specified element to become available. An automated test runs faster than the screen loads, so it has to wait explicitly; in the console, the time spent typing usually makes the wait unnecessary.
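
As a rough illustration of the waiting step, the sketch below waits for an element with an explicit timeout before tapping it. The helper class and the 30-second timeout are arbitrary choices made for the example, and `FirstName` is simply the identifier used elsewhere in this article.

```csharp
using System;
using Xamarin.UITest;

// Hypothetical helper, shown only to illustrate WaitForElement.
public static class WaitExamples
{
    public static void WaitThenTap(IApp app)
    {
        // Fails the test with this message if the element has not
        // appeared within 30 seconds.
        app.WaitForElement(
            c => c.Marked("FirstName"),
            "Timed out waiting for the FirstName field",
            TimeSpan.FromSeconds(30));

        // Safe to interact now that the element is on screen.
        app.Tap(c => c.Marked("FirstName"));
    }
}
```

A descriptive timeout message pays off later, since it is often the only clue in a test report from a device you cannot see.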

Elements are referenced by using the Marked or Query methods. Marked matches on the identifying fields each platform exposes: the accessibility identifier or label on iOS, the id, content description or text on Android, and the AutomationId in Xamarin.Forms, which is mapped onto those native fields. When using Xamarin.Forms, the same UITests can be used for Android and iOS if there aren’t too many platform-specific tweaks.
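
On the application side, a Xamarin.Forms page might expose its controls to the tests as sketched below. The page itself is hypothetical; the identifiers are chosen to match the ones used in this article’s sample test.

```csharp
using Xamarin.Forms;

// Hypothetical page: each control gets an AutomationId that a UI test
// can pass to Marked(...).
public class NamePage : ContentPage
{
    public NamePage()
    {
        Content = new StackLayout
        {
            Children =
            {
                new Entry { Placeholder = "First name", AutomationId = "FirstName" },
                new Entry { Placeholder = "Last name", AutomationId = "LastName" },
                new Switch { AutomationId = "OnOff" },
                new Button { Text = "Submit", AutomationId = "Submit" },
                new Label { AutomationId = "Result" }
            }
        };
    }
}
```

Keeping identifiers separate from visible text means a wording or localization change does not break the tests.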

Once a test is set up and any necessary steps have been performed, the Query method can be executed to gain access to elements on the screen. At this point, values and displayed text are checked to determine the result of the test, just as in any other unit test. Xamarin.UITest runs on top of NUnit 2.6, so asserts are available inside test methods.

    [Test]
    public void SubmitFormTest()
    {
        // Wait for the form to load before interacting with it
        app.WaitForElement("FirstName");

        // Fill in the form
        app.EnterText(a => a.Marked("FirstName"), "Sean");
        app.EnterText(a => a.Marked("LastName"), "Sparkman");
        app.DismissKeyboard();
        app.Tap(a => a.Marked("OnOff"));

        // Submit and wait for the result to appear
        app.Tap(a => a.Marked("Submit"));
        app.WaitForElement("Result");

        // Exactly one result label should be shown, reading "Success"
        var label = app.Query(a => a.Marked("Result"));
        Assert.AreEqual(1, label.Length);
        Assert.AreEqual("Success", label[0].Text);
    }

A great example of a test to automate is registration. This workflow should be tested with every version, but a manual test should not be necessary. A developer could create multiple successful and failing tests of registration. Failing tests could include when not connected to the internet, using invalid data in fields or trying to register as an existing user. These are important to test with each release but take up valuable time that could be spent on testing new features.
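
One of the failing registration cases could be sketched along the following lines. Every identifier here (`Email`, `Password`, `Register`, `ErrorMessage`) is hypothetical and would need to match your own screen, and the fixture starts an Android app only to keep the example short.

```csharp
using NUnit.Framework;
using Xamarin.UITest;

[TestFixture]
public class RegistrationTests
{
    IApp app;

    [SetUp]
    public void BeforeEachTest()
    {
        // Assumes the configurator can locate the app under test.
        app = ConfigureApp.Android.StartApp();
    }

    [Test]
    public void Register_WithInvalidEmail_ShowsError()
    {
        app.WaitForElement(c => c.Marked("Email"));

        // Deliberately malformed input
        app.EnterText(c => c.Marked("Email"), "not-an-email");
        app.EnterText(c => c.Marked("Password"), "correct horse battery");
        app.DismissKeyboard();
        app.Tap(c => c.Marked("Register"));

        // The app should stay on the form and show a validation message.
        app.WaitForElement(c => c.Marked("ErrorMessage"));
        var error = app.Query(c => c.Marked("ErrorMessage"));

        Assert.AreEqual(1, error.Length);
        // The exact wording is app-specific, so only require some text.
        Assert.IsFalse(string.IsNullOrEmpty(error[0].Text));
    }
}
```

The same pattern extends to the other failure cases, such as registering an existing user, by changing only the input and the expected message.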

Once written, UITests can be run locally on a simulator, an emulator or a physical device that is plugged into the executing computer. However, this limits the number of devices the tests can be run against. The next step is to upload the tests to Microsoft’s Visual Studio App Center, which increases the number of devices tested on. Once uploaded, the devices used for the tests can be selected. Microsoft allows for testing on thousands of iOS and Android devices in the cloud. Each step along the way can be documented visually with the Screenshot method, which takes a string that gives the screenshot a meaningful name. This method also works locally, providing a view into what is happening with the tests.
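
A sketch of documenting a test run with screenshots follows. It assumes, to the best of my understanding, that local runs only save image files when the app is configured with `EnableLocalScreenshots()`; the fixture name and screenshot titles are illustrative, and the element identifiers are the ones from the sample test in this article.

```csharp
using NUnit.Framework;
using Xamarin.UITest;

[TestFixture]
public class ScreenshotTests
{
    IApp app;

    [SetUp]
    public void BeforeEachTest()
    {
        // Opt in to saving screenshots when running outside the cloud.
        app = ConfigureApp.Android
            .EnableLocalScreenshots()
            .StartApp();
    }

    [Test]
    public void SubmitForm_CapturesEachStep()
    {
        app.WaitForElement(c => c.Marked("FirstName"));
        app.Screenshot("Form loaded");

        app.EnterText(c => c.Marked("FirstName"), "Sean");
        app.DismissKeyboard();
        app.Screenshot("First name entered");

        app.Tap(c => c.Marked("Submit"));
        app.WaitForElement(c => c.Marked("Result"));
        app.Screenshot("Result shown");
    }
}
```

Naming each screenshot after the step it follows makes a multi-device report much easier to scan.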

Even if Xamarin.UITest is not used, best practices should include using an automated UI testing framework. Regression testing and testing on multiple devices should always be done when working in mobile development. The mobile app stores are a highly competitive space. Developers need to be able to move quickly and respond by releasing new versions with new features to be competitive. Automated testing allows programmers to push forward with confidence and speed without compromising on quality, because quality is king.